- #Kiwix zim file dump how to

- #Kiwix zim file dump archive

- #Kiwix zim file dump code

- #Kiwix zim file dump download

#Kiwix zim file dump download

The dumps are also available from the Internet Archive.

Where are the uploaded files (image, audio, video, etc.)? Images and other uploaded media are available from mirrors in addition to being served directly from Wikimedia servers. Bulk download of media is (as of September 2013) available from mirrors but not offered directly from Wikimedia servers.

#Kiwix zim file dump code

In the …org directory you will find the latest SQL and XML dumps for all projects, not just English. The sub-directories are named for the language code and the appropriate project. Some other directories (e.g. simple, nostalgia) also exist, with the same structure.

#Kiwix zim file dump how to

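Once you have cut the relevant piece out of the archive (for example with dd, at the byte offset listed in the index), you could either bzip2-decompress it or use bzip2recover, and then search the first resulting file for the article ID. See … for information about such multistream files and how to decompress them with Python; see also … and related files for an old working toy.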
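A minimal Python sketch of the same idea, assuming you already have the byte offset of the right stream from the index: instead of dd plus bzip2, it seeks to the offset and lets `bz2.BZ2Decompressor` decompress just that one stream (the decompressor stops at the end of the first stream it reads). The helper names `read_stream_at` and `find_page` and the toy archive in the demo are hypothetical; `find_page` also assumes MediaWiki-style XML in which a page's own `<id>` comes before any revision `<id>`.

```python
import bz2
import io
import re
from typing import BinaryIO, Optional


def read_stream_at(f: BinaryIO, offset: int, chunk_size: int = 64 * 1024) -> bytes:
    """Decompress only the bz2 stream that starts at byte `offset`.

    Similar in spirit to cutting the stream out with dd and running bzip2
    on it: BZ2Decompressor stops at the end of the first stream, so the
    rest of the dump is never decompressed.
    """
    decompressor = bz2.BZ2Decompressor()
    parts = []
    f.seek(offset)
    while not decompressor.eof:
        chunk = f.read(chunk_size)
        if not chunk:
            raise EOFError("archive ended before the bz2 stream was complete")
        parts.append(decompressor.decompress(chunk))
    return b"".join(parts)


def find_page(xml_fragment: str, page_id: int) -> Optional[str]:
    """Return the <page> element whose first <id> equals `page_id`, if any.

    Assumption: the page's own <id> appears before any <revision><id>.
    """
    for match in re.finditer(r"<page>.*?</page>", xml_fragment, re.DOTALL):
        page = match.group(0)
        first_id = re.search(r"<id>(\d+)</id>", page)
        if first_id and int(first_id.group(1)) == page_id:
            return page
    return None


if __name__ == "__main__":
    # Hypothetical toy "multistream" archive: two bz2 streams back to back.
    first = bz2.compress(b"<page><title>A</title><id>1</id></page>")
    second = bz2.compress(b"<page><title>B</title><id>2</id></page>")
    archive = io.BytesIO(first + second)

    # In a real dump the offset would come from the index file.
    xml = read_stream_at(archive, len(first)).decode("utf-8")
    print(find_page(xml, 2))  # prints <page><title>B</title><id>2</id></page>
```

With a real dump you would pass a file opened with `open(path, "rb")` instead of the in-memory toy archive.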

#Kiwix zim file dump archive

You might be tempted to get the smaller non-multistream archive, but it will be useless if you don't unpack it, and it will unpack to roughly 5 to 10 times its original size.

NOTE THAT the multistream dump file contains multiple bz2 'streams' (bz2 header, body, footer) concatenated together into one file, in contrast to the vanilla file, which contains one stream. Each separate 'stream' (or really, file) in the multistream dump contains 100 pages, except possibly the last one.

For multistream, you can get an index file (…2). The first field of this index is the number of bytes to seek into the compressed archive (…2), the second is the article ID, and the third is the article title.

Cut a small part out of the archive with dd, using the byte offset found in the index.
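The index can be read with a few lines of Python. This sketch assumes the three fields are separated by colons (the text above does not name the separator), so it splits at most twice to keep titles that themselves contain colons intact. `IndexEntry`, `parse_index`, `offset_for_title`, `pages_per_stream` and the sample lines are hypothetical illustrations, not part of any official tool.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class IndexEntry(NamedTuple):
    offset: int   # bytes to seek into the compressed multistream archive
    page_id: int  # article ID
    title: str    # article title


def parse_index(lines: Iterable[str]) -> List[IndexEntry]:
    """Parse 'offset:id:title' lines (the colon separator is an assumption)."""
    entries = []
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue
        # maxsplit=2 so a title such as "Beta: a subtitle" stays whole.
        offset, page_id, title = line.split(":", 2)
        entries.append(IndexEntry(int(offset), int(page_id), title))
    return entries


def offset_for_title(entries: Iterable[IndexEntry], title: str) -> int:
    """Return the byte offset of the stream that holds `title`."""
    for entry in entries:
        if entry.title == title:
            return entry.offset
    raise KeyError(title)


def pages_per_stream(entries: Iterable[IndexEntry]) -> Dict[int, int]:
    """Count how many index entries point at each stream offset."""
    counts: Dict[int, int] = defaultdict(int)
    for entry in entries:
        counts[entry.offset] += 1
    return dict(counts)


if __name__ == "__main__":
    # Hypothetical index lines. A real index file is itself bz2-compressed
    # and could be read with bz2.open(path, "rt", encoding="utf-8").
    sample = [
        "600:10:Alpha\n",
        "600:12:Beta: a subtitle\n",
        "9000:15:Gamma\n",
    ]
    entries = parse_index(sample)
    print(offset_for_title(entries, "Beta: a subtitle"))  # 600
    print(pages_per_stream(entries))                      # {600: 2, 9000: 1}
```

Because each stream holds up to 100 pages, many entries share the same offset; in a real index you would expect most offsets to have 100 entries.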

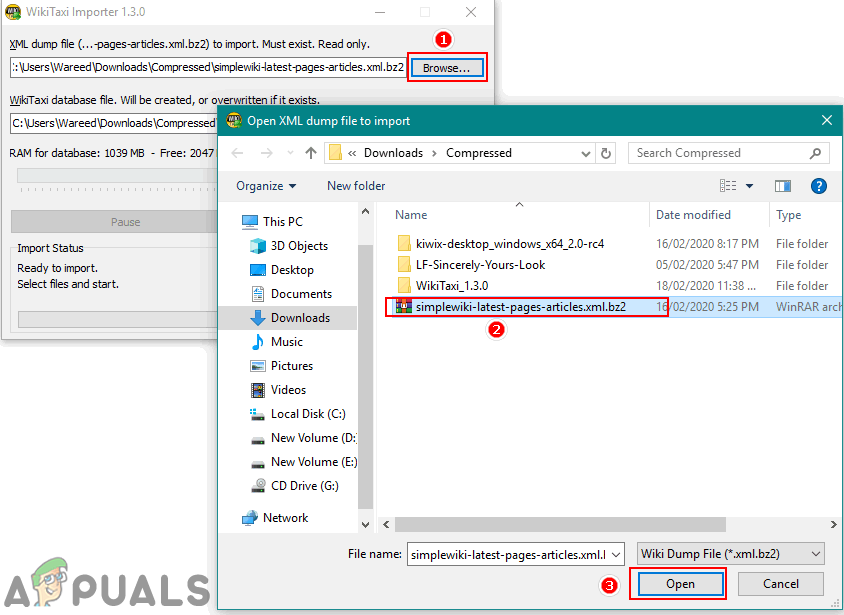

To download a subset of the database in XML format, such as a specific category or a list of articles, see Special:Export, whose usage is described at Help:Export.

GET THE MULTISTREAM VERSION! (and the corresponding index file, …2) The multistream and non-multistream files (…2 and …2) both contain the same XML contents, so if you unpack either, you get the same data. But with multistream, it is possible to get an article from the archive without unpacking the whole thing. Your reader should handle this for you; if your reader doesn't support it, it will work anyway, since multistream and non-multistream contain the same XML. The only downside to multistream is that it is marginally larger.
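To see why both files hold the same XML while only multistream allows random access, here is a toy Python sketch (the `make_multistream` helper and the sample pages are hypothetical): it compresses pages in fixed-size groups into back-to-back bz2 streams, records where each stream starts, and checks that decompressing the whole thing matches the single-stream version. It relies on `bz2.decompress` accepting concatenated streams, which Python supports since version 3.3.

```python
import bz2
from typing import List, Tuple


def make_multistream(pages: List[bytes], pages_per_stream: int) -> Tuple[bytes, List[int]]:
    """Compress `pages` as back-to-back bz2 streams of `pages_per_stream` pages.

    Returns the archive bytes and the byte offset where each stream starts,
    i.e. the numbers a multistream index would record.
    """
    chunks: List[bytes] = []
    offsets: List[int] = []
    position = 0
    for start in range(0, len(pages), pages_per_stream):
        stream = bz2.compress(b"".join(pages[start:start + pages_per_stream]))
        offsets.append(position)
        chunks.append(stream)
        position += len(stream)
    return b"".join(chunks), offsets


if __name__ == "__main__":
    # Hypothetical toy pages standing in for real <page> elements.
    pages = [b"<page><id>%d</id></page>" % i for i in range(1, 6)]
    multistream, offsets = make_multistream(pages, pages_per_stream=2)
    single = bz2.compress(b"".join(pages))

    # Same XML either way: bz2.decompress handles concatenated streams.
    print(bz2.decompress(multistream) == bz2.decompress(single))  # True

    # Only the multistream layout lets one piece be decompressed on its own.
    last_stream = multistream[offsets[2]:]
    print(bz2.BZ2Decompressor().decompress(last_stream))  # b'<page><id>5</id></page>'
```

The per-stream headers and footers are also where the small size overhead of multistream comes from.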

Go to Latest Dumps and look for all the files that have 'pages-meta-history' in their name (these contain the full revision history). Please only download these if you know you can cope with this quantity of data.

Some of the many ways to read Wikipedia while offline:
